Publications in 2022

Here is a list of all papers from 2022. Kudos to all my collaborators. Well done!

Erik Wijmans, Irfan Essa, Dhruv Batra

How to Train PointGoal Navigation Agents on a (Sample and Compute) Budget Proceedings Article

In: International Conference on Autonomous Agents and Multi-Agent Systems, 2022.

Tags: computer vision, embodied agents, navigation

@inproceedings{2022-Wijmans-TPNASCB,

title = {How to Train PointGoal Navigation Agents on a (Sample and Compute) Budget},

author = {Erik Wijmans and Irfan Essa and Dhruv Batra},

url = {https://arxiv.org/abs/2012.06117

https://ifaamas.org/Proceedings/aamas2022/pdfs/p1762.pdf},

doi = {10.48550/arXiv.2012.06117},

year = {2022},

date = {2022-12-01},

urldate = {2020-12-01},

booktitle = {International Conference on Autonomous Agents and Multi-Agent Systems},

journal = {arXiv},

number = {arXiv:2012.06117},

abstract = {PointGoal navigation has seen significant recent interest and progress, spurred on by the Habitat platform and associated challenge. In this paper, we study PointGoal navigation under both a sample budget (75 million frames) and a compute budget (1 GPU for 1 day). We conduct an extensive set of experiments, cumulatively totaling over 50,000 GPU-hours, that let us identify and discuss a number of ostensibly minor but significant design choices -- the advantage estimation procedure (a key component in training), visual encoder architecture, and a seemingly minor hyper-parameter change. Overall, these design choices lead to considerable and consistent improvements over the baselines present in Savva et al. Under a sample budget, performance for RGB-D agents improves by 8 SPL on Gibson (14% relative improvement) and 20 SPL on Matterport3D (38% relative improvement). Under a compute budget, performance for RGB-D agents improves by 19 SPL on Gibson (32% relative improvement) and 35 SPL on Matterport3D (220% relative improvement). We hope our findings and recommendations will serve to make the community's experiments more efficient.},

keywords = {computer vision, embodied agents, navigation},

pubstate = {published},

tppubtype = {inproceedings}

}

Erik Wijmans, Irfan Essa, Dhruv Batra

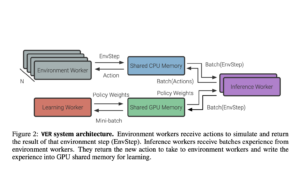

VER: Scaling On-Policy RL Leads to the Emergence of Navigation in Embodied Rearrangement Proceedings Article

In: Oh, Alice H., Agarwal, Alekh, Belgrave, Danielle, Cho, Kyunghyun (Ed.): Advances in Neural Information Processing Systems (NeurIPS), 2022.

Tags: machine learning, NeurIPS, reinforcement learning, robotics

@inproceedings{2022-Wijmans-SOLENER,

title = {VER: Scaling On-Policy RL Leads to the Emergence of Navigation in Embodied Rearrangement},

author = {Erik Wijmans and Irfan Essa and Dhruv Batra},

editor = {Alice H. Oh and Alekh Agarwal and Danielle Belgrave and Kyunghyun Cho},

url = {https://arxiv.org/abs/2210.05064

https://openreview.net/forum?id=VrJWseIN98},

doi = {10.48550/ARXIV.2210.05064},

year = {2022},

date = {2022-12-01},

urldate = {2022-12-01},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

abstract = {We present Variable Experience Rollout (VER), a technique for efficiently scaling batched on-policy reinforcement learning in heterogeneous environments (where different environments take vastly different times to generate rollouts) to many GPUs residing on, potentially, many machines. VER combines the strengths of and blurs the line between synchronous and asynchronous on-policy RL methods (SyncOnRL and AsyncOnRL, respectively). Specifically, it learns from on-policy experience (like SyncOnRL) and has no synchronization points (like AsyncOnRL), enabling high throughput.

We find that VER leads to significant and consistent speed-ups across a broad range of embodied navigation and mobile manipulation tasks in photorealistic 3D simulation environments. Specifically, for PointGoal navigation and ObjectGoal navigation in Habitat 1.0, VER is 60-100% faster (1.6-2x speedup) than DD-PPO, the current state of the art for distributed SyncOnRL, with similar sample efficiency. For mobile manipulation tasks (open fridge/cabinet, pick/place objects) in Habitat 2.0, VER is 150% faster (2.5x speedup) on 1 GPU and 170% faster (2.7x speedup) on 8 GPUs than DD-PPO. Compared to SampleFactory (the current state-of-the-art AsyncOnRL), VER matches its speed on 1 GPU, and is 70% faster (1.7x speedup) on 8 GPUs with better sample efficiency.

We leverage these speed-ups to train chained skills for GeometricGoal rearrangement tasks in the Home Assistant Benchmark (HAB). We find a surprising emergence of navigation in skills that do not ostensibly require any navigation. Specifically, the Pick skill involves a robot picking an object from a table. During training the robot was always spawned close to the table and never needed to navigate. However, we find that if base movement is part of the action space, the robot learns to navigate then pick an object in new environments with 50% success, demonstrating surprisingly high out-of-distribution generalization.},

keywords = {machine learning, NeurIPS, reinforcement learning, robotics},

pubstate = {published},

tppubtype = {inproceedings}

}

Huda Alamri, Anthony Bilic, Michael Hu, Apoorva Beedu, Irfan Essa

End-to-end Multimodal Representation Learning for Video Dialog Proceedings Article

In: NeurIPS Workshop on Vision Transformers: Theory and Applications, 2022.

Tags: computational video, computer vision, vision transformers

@inproceedings{2022-Alamri-EMRLVD,

title = {End-to-end Multimodal Representation Learning for Video Dialog},

author = {Huda Alamri and Anthony Bilic and Michael Hu and Apoorva Beedu and Irfan Essa},

url = {https://arxiv.org/abs/2210.14512},

doi = {10.48550/arXiv.2210.14512},

year = {2022},

date = {2022-12-01},

urldate = {2022-12-01},

booktitle = {NeurIPS Workshop on Vision Transformers: Theory and Applications},

abstract = {The video-based dialog task is a challenging multimodal learning task that has received increasing attention over the past few years, with state-of-the-art models obtaining new performance records. This progress is largely powered by the adaptation of the more powerful transformer-based language encoders. Despite this progress, existing approaches do not effectively utilize visual features to help solve tasks. Recent studies show that state-of-the-art models are biased towards textual information rather than visual cues. In order to better leverage the available visual information, this study proposes a new framework that combines a 3D-CNN network and transformer-based networks into a single visual encoder to extract more robust semantic representations from videos. The visual encoder is jointly trained end-to-end with other input modalities such as text and audio. Experiments on the AVSD task show significant improvement over baselines in both generative and retrieval tasks.},

keywords = {computational video, computer vision, vision transformers},

pubstate = {published},

tppubtype = {inproceedings}

}

Apoorva Beedu, Huda Alamri, Irfan Essa

Video based Object 6D Pose Estimation using Transformers Proceedings Article

In: NeurIPS Workshop on Vision Transformers: Theory and Applications, 2022.

Tags: computer vision, vision transformers

@inproceedings{2022-Beedu-VBOPEUT,

title = {Video based Object 6D Pose Estimation using Transformers},

author = {Apoorva Beedu and Huda Alamri and Irfan Essa},

url = {https://arxiv.org/abs/2210.13540},

doi = {10.48550/arXiv.2210.13540},

year = {2022},

date = {2022-12-01},

urldate = {2022-12-01},

booktitle = {NeurIPS Workshop on Vision Transformers: Theory and Applications},

abstract = {We introduce a Transformer based 6D Object Pose Estimation framework VideoPose, comprising an end-to-end attention based modelling architecture, that attends to previous frames in order to estimate accurate 6D Object Poses in videos. Our approach leverages the temporal information from a video sequence for pose refinement, along with being computationally efficient and robust. Compared to existing methods, our architecture is able to capture and reason from long-range dependencies efficiently, thus iteratively refining over video sequences. Experimental evaluation on the YCB-Video dataset shows that our approach is on par with the state-of-the-art Transformer methods, and performs significantly better relative to CNN based approaches. Further, with a speed of 33 fps, it is also more efficient and therefore applicable to a variety of applications that require real-time object pose estimation. Training code and pretrained models are available at https://anonymous.4open.science/r/VideoPose-3C8C.},

keywords = {computer vision, vision transformers},

pubstate = {published},

tppubtype = {inproceedings}

}

José Lezama, Huiwen Chang, Lu Jiang, Irfan Essa

Improved Masked Image Generation with Token-Critic Proceedings Article

In: European Conference on Computer Vision (ECCV), arXiv, 2022, ISBN: 978-3-031-20050-2.

Tags: computer vision, ECCV, generative AI, generative media, google

@inproceedings{2022-Lezama-IMIGWT,

title = {Improved Masked Image Generation with Token-Critic},

author = {José Lezama and Huiwen Chang and Lu Jiang and Irfan Essa},

url = {https://arxiv.org/abs/2209.04439

https://rdcu.be/c61MZ},

doi = {10.1007/978-3-031-20050-2_5},

isbn = {978-3-031-20050-2},

year = {2022},

date = {2022-10-28},

urldate = {2022-10-28},

booktitle = {European Conference on Computer Vision (ECCV)},

volume = {13683},

publisher = {arXiv},

abstract = {Non-autoregressive generative transformers recently demonstrated impressive image generation performance, and orders of magnitude faster sampling than their autoregressive counterparts. However, optimal parallel sampling from the true joint distribution of visual tokens remains an open challenge. In this paper we introduce Token-Critic, an auxiliary model to guide the sampling of a non-autoregressive generative transformer. Given a masked-and-reconstructed real image, the Token-Critic model is trained to distinguish which visual tokens belong to the original image and which were sampled by the generative transformer. During non-autoregressive iterative sampling, Token-Critic is used to select which tokens to accept and which to reject and resample. Coupled with Token-Critic, a state-of-the-art generative transformer significantly improves its performance, and outperforms recent diffusion models and GANs in terms of the trade-off between generated image quality and diversity, in the challenging class-conditional ImageNet generation.},

keywords = {computer vision, ECCV, generative AI, generative media, google},

pubstate = {published},

tppubtype = {inproceedings}

}

Xiang Kong, Lu Jiang, Huiwen Chang, Han Zhang, Yuan Hao, Haifeng Gong, Irfan Essa

BLT: Bidirectional Layout Transformer for Controllable Layout Generation Proceedings Article

In: European Conference on Computer Vision (ECCV), 2022, ISBN: 978-3-031-19789-5.

Tags: computer vision, ECCV, generative AI, generative media, google, vision transformer

@inproceedings{2022-Kong-BLTCLG,

title = {BLT: Bidirectional Layout Transformer for Controllable Layout Generation},

author = {Xiang Kong and Lu Jiang and Huiwen Chang and Han Zhang and Yuan Hao and Haifeng Gong and Irfan Essa},

url = {https://arxiv.org/abs/2112.05112

https://rdcu.be/c61AE},

doi = {10.1007/978-3-031-19790-1_29},

isbn = {978-3-031-19789-5},

year = {2022},

date = {2022-10-25},

urldate = {2022-10-25},

booktitle = {European Conference on Computer Vision (ECCV)},

volume = {13677},

abstract = {Creating visual layouts is a critical step in graphic design. Automatic generation of such layouts is essential for scalable and diverse visual designs. To advance conditional layout generation, we introduce BLT, a bidirectional layout transformer. BLT differs from previous work on transformers in adopting non-autoregressive transformers. In training, BLT learns to predict the masked attributes by attending to surrounding attributes in two directions. During inference, BLT first generates a draft layout from the input and then iteratively refines it into a high-quality layout by masking out low-confident attributes. The masks generated in both training and inference are controlled by a new hierarchical sampling policy. We verify the proposed model on six benchmarks of diverse design tasks. Experimental results demonstrate two benefits compared to the state-of-the-art layout transformer models. First, our model empowers layout transformers to fulfill controllable layout generation. Second, it achieves up to 10x speedup in generating a layout at inference time than the layout transformer baseline. Code is released at https://shawnkx.github.io/blt.},

keywords = {computer vision, ECCV, generative AI, generative media, google, vision transformer},

pubstate = {published},

tppubtype = {inproceedings}

}

Peggy Chi, Tao Dong, Christian Frueh, Brian Colonna, Vivek Kwatra, Irfan Essa

Synthesis-Assisted Video Prototyping From a Document Proceedings Article

In: Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, pp. 1–10, 2022.

Tags: computational video, generative media, google, human-computer interaction, UIST, video editing

@inproceedings{2022-Chi-SVPFD,

title = {Synthesis-Assisted Video Prototyping From a Document},

author = {Peggy Chi and Tao Dong and Christian Frueh and Brian Colonna and Vivek Kwatra and Irfan Essa},

url = {https://research.google/pubs/pub51631/

https://dl.acm.org/doi/abs/10.1145/3526113.3545676},

doi = {10.1145/3526113.3545676},

year = {2022},

date = {2022-10-01},

urldate = {2022-10-01},

booktitle = {Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology},

pages = {1--10},

abstract = {Video productions commonly start with a script, especially for talking head videos that feature a speaker narrating to the camera. When the source materials come from a written document -- such as a web tutorial, it takes iterations to refine content from a text article to a spoken dialogue, while considering visual compositions in each scene. We propose Doc2Video, a video prototyping approach that converts a document to interactive scripting with a preview of synthetic talking head videos. Our pipeline decomposes a source document into a series of scenes, each automatically creating a synthesized video of a virtual instructor. Designed for a specific domain -- programming cookbooks, we apply visual elements from the source document, such as a keyword, a code snippet or a screenshot, in suitable layouts. Users edit narration sentences, break or combine sections, and modify visuals to prototype a video in our Editing UI. We evaluated our pipeline with public programming cookbooks. Feedback from professional creators shows that our method provided a reasonable starting point to engage them in interactive scripting for a narrated instructional video.},

keywords = {computational video, generative media, google, human-computer interaction, UIST, video editing},

pubstate = {published},

tppubtype = {inproceedings}

}

Harish Haresamudram, Irfan Essa, Thomas Ploetz

Assessing the State of Self-Supervised Human Activity Recognition using Wearables Journal Article

In: Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), vol. 6, iss. 3, no. 116, pp. 1–47, 2022.

Tags: activity recognition, IMWUT, ubiquitous computing, wearable computing

@article{2022-Haresamudram-ASSHARUW,

title = {Assessing the State of Self-Supervised Human Activity Recognition using Wearables},

author = {Harish Haresamudram and Irfan Essa and Thomas Ploetz},

url = {https://dl.acm.org/doi/10.1145/3550299

https://arxiv.org/abs/2202.12938

https://arxiv.org/pdf/2202.12938

},

doi = {10.1145/3550299},

year = {2022},

date = {2022-09-07},

urldate = {2022-09-07},

booktitle = {Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT)},

journal = {Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT)},

volume = {6},

number = {116},

issue = {3},

pages = {1--47},

publisher = {ACM},

abstract = {The emergence of self-supervised learning in the field of wearables-based human activity recognition (HAR) has opened up opportunities to tackle the most pressing challenges in the field, namely to exploit unlabeled data to derive reliable recognition systems for scenarios where only small amounts of labeled training samples can be collected. As such, self-supervision, i.e., the paradigm of 'pretrain-then-finetune', has the potential to become a strong alternative to the predominant end-to-end training approaches, let alone hand-crafted features for the classic activity recognition chain. Recently, a number of contributions have been made that introduced self-supervised learning into the field of HAR, including Multi-task self-supervision, Masked Reconstruction, CPC, and SimCLR, to name but a few. With the initial success of these methods, the time has come for a systematic inventory and analysis of the potential self-supervised learning has for the field. This paper provides exactly that. We assess the progress of self-supervised HAR research by introducing a framework that performs a multi-faceted exploration of model performance. We organize the framework into three dimensions, each containing three constituent criteria, such that each dimension captures specific aspects of performance, including the robustness to differing source and target conditions, the influence of dataset characteristics, and the feature space characteristics. We utilize this framework to assess seven state-of-the-art self-supervised methods for HAR, leading to the formulation of insights into the properties of these techniques and to establish their value towards learning representations for diverse scenarios.

},

keywords = {activity recognition, IMWUT, ubiquitous computing, wearable computing},

pubstate = {published},

tppubtype = {article}

}

Daniel Nkemelu, Harshil Shah, Irfan Essa, Michael L. Best

Tackling Hate Speech in Low-resource Languages with Context Experts Proceedings Article

In: International Conference on Information & Communication Technologies and Development (ICTD), 2022.

Tags: computational journalism, ICTD, social computing

@inproceedings{2022-Nkemelu-THSLLWCE,

title = {Tackling Hate Speech in Low-resource Languages with Context Experts},

author = {Daniel Nkemelu and Harshil Shah and Irfan Essa and Michael L. Best},

url = {https://www.nkemelu.com/data/ictd2022_nkemelu_final.pdf

},

year = {2022},

date = {2022-06-01},

urldate = {2022-06-01},

booktitle = {International Conference on Information & Communication Technologies and Development (ICTD)},

abstract = {Given Myanmar's historical and socio-political context, hate speech spread on social media has escalated into offline unrest and violence. This paper presents findings from our remote study on the automatic detection of hate speech online in Myanmar. We argue that effectively addressing this problem will require community-based approaches that combine the knowledge of context experts with machine learning tools that can analyze the vast amount of data produced. To this end, we develop a systematic process to facilitate this collaboration covering key aspects of data collection, annotation, and model validation strategies. We highlight challenges in this area stemming from small and imbalanced datasets, the need to balance non-glamorous data work and stakeholder priorities, and closed data sharing practices. Stemming from these findings, we discuss avenues for further work in developing and deploying hate speech detection systems for low-resource languages.},

keywords = {computational journalism, ICTD, social computing},

pubstate = {published},

tppubtype = {inproceedings}

}

Niranjan Kumar, Irfan Essa, Sehoon Ha

Graph-based Cluttered Scene Generation and Interactive Exploration using Deep Reinforcement Learning Proceedings Article

In: Proceedings International Conference on Robotics and Automation (ICRA), pp. 7521–7527, 2022.

Tags: ICRA, machine learning, reinforcement learning, robotics

@inproceedings{2021-Kumar-GCSGIEUDRL,

title = {Graph-based Cluttered Scene Generation and Interactive Exploration using Deep Reinforcement Learning},

author = {Niranjan Kumar and Irfan Essa and Sehoon Ha},

url = {https://doi.org/10.1109/ICRA46639.2022.9811874

https://arxiv.org/abs/2109.10460

https://arxiv.org/pdf/2109.10460

https://www.kniranjankumar.com/projects/5_clutr

https://kniranjankumar.github.io/assets/pdf/graph_based_clutter.pdf

https://youtu.be/T2Jo7wwaXss},

doi = {10.1109/ICRA46639.2022.9811874},

year = {2022},

date = {2022-05-01},

urldate = {2022-05-01},

booktitle = {Proceedings International Conference on Robotics and Automation (ICRA)},

journal = {arXiv},

number = {2109.10460},

pages = {7521--7527},

abstract = {We introduce a novel method to teach a robotic agent to interactively explore cluttered yet structured scenes, such as kitchen pantries and grocery shelves, by leveraging the physical plausibility of the scene. We propose a novel learning framework to train an effective scene exploration policy to discover hidden objects with minimal interactions. First, we define a novel scene grammar to represent structured clutter. Then we train a Graph Neural Network (GNN) based Scene Generation agent using deep reinforcement learning (deep RL), to manipulate this Scene Grammar to create a diverse set of stable scenes, each containing multiple hidden objects. Given such cluttered scenes, we then train a Scene Exploration agent, using deep RL, to uncover hidden objects by interactively rearranging the scene.

},

keywords = {ICRA, machine learning, reinforcement learning, robotics},

pubstate = {published},

tppubtype = {inproceedings}

}

Karan Samel, Zelin Zhao, Binghong Chen, Shuang Li, Dharmashankar Subramanian, Irfan Essa, Le Song

Learning Temporal Rules from Noisy Timeseries Data Journal Article

In: arXiv preprint arXiv:2202.05403, 2022.

Tags: activity recognition, machine learning

@article{2022-Samel-LTRFNTD,

title = {Learning Temporal Rules from Noisy Timeseries Data},

author = {Karan Samel and Zelin Zhao and Binghong Chen and Shuang Li and Dharmashankar Subramanian and Irfan Essa and Le Song},

url = {https://arxiv.org/abs/2202.05403

https://arxiv.org/pdf/2202.05403},

year = {2022},

date = {2022-02-01},

urldate = {2022-02-01},

journal = {arXiv preprint arXiv:2202.05403},

abstract = {Events across a timeline are a common data representation, seen in different temporal modalities. Individual atomic events can occur in a certain temporal ordering to compose higher level composite events. Examples of a composite event are a patient's medical symptom or a baseball player hitting a home run, caused by distinct temporal orderings of patient vitals and player movements respectively. Such salient composite events are provided as labels in temporal datasets and most works optimize models to predict these composite event labels directly. We focus on uncovering the underlying atomic events and their relations that lead to the composite events within a noisy temporal data setting. We propose Neural Temporal Logic Programming (Neural TLP) which first learns implicit temporal relations between atomic events and then lifts logic rules for composite events, given only the composite events labels for supervision. This is done through efficiently searching through the combinatorial space of all temporal logic rules in an end-to-end differentiable manner. We evaluate our method on video and healthcare datasets where it outperforms the baseline methods for rule discovery.

},

keywords = {activity recognition, machine learning},

pubstate = {published},

tppubtype = {article}

}

Chengzhi Mao, Lu Jiang, Mostafa Dehghani, Carl Vondrick, Rahul Sukthankar, Irfan Essa

Discrete Representations Strengthen Vision Transformer Robustness Proceedings Article

In: Proceedings of International Conference on Learning Representations (ICLR), 2022.

Tags: computer vision, google, machine learning, vision transformer

@inproceedings{2022-Mao-DRSVTR,

title = {Discrete Representations Strengthen Vision Transformer Robustness},

author = {Chengzhi Mao and Lu Jiang and Mostafa Dehghani and Carl Vondrick and Rahul Sukthankar and Irfan Essa},

url = {https://iclr.cc/virtual/2022/poster/6647

https://arxiv.org/abs/2111.10493

https://research.google/pubs/pub51388/

https://openreview.net/forum?id=8hWs60AZcWk},

doi = {10.48550/arXiv.2111.10493},

year = {2022},

date = {2022-01-28},

urldate = {2022-04-01},

booktitle = {Proceedings of International Conference on Learning Representations (ICLR)},

journal = {arXiv preprint arXiv:2111.10493},

abstract = {Vision Transformer (ViT) is emerging as the state-of-the-art architecture for image recognition. While recent studies suggest that ViTs are more robust than their convolutional counterparts, our experiments find that ViTs trained on ImageNet are overly reliant on local textures and fail to make adequate use of shape information. ViTs thus have difficulties generalizing to out-of-distribution, real-world data. To address this deficiency, we present a simple and effective architecture modification to ViT's input layer by adding discrete tokens produced by a vector-quantized encoder. Different from the standard continuous pixel tokens, discrete tokens are invariant under small perturbations and contain less information individually, which promote ViTs to learn global information that is invariant. Experimental results demonstrate that adding discrete representation on four architecture variants strengthens ViT robustness by up to 12% across seven ImageNet robustness benchmarks while maintaining the performance on ImageNet.},

keywords = {computer vision, google, machine learning, vision transformer},

pubstate = {published},

tppubtype = {inproceedings}

}

Steven Hickson, Karthik Raveendran, Irfan Essa

Sharing Decoders: Network Fission for Multi-Task Pixel Prediction Proceedings Article

In: IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 3771–3780, 2022.

Tags: computer vision, google, machine learning

@inproceedings{2022-Hickson-SDNFMPP,

title = {Sharing Decoders: Network Fission for Multi-Task Pixel Prediction},

author = {Steven Hickson and Karthik Raveendran and Irfan Essa},

url = {https://openaccess.thecvf.com/content/WACV2022/papers/Hickson_Sharing_Decoders_Network_Fission_for_Multi-Task_Pixel_Prediction_WACV_2022_paper.pdf

https://openaccess.thecvf.com/content/WACV2022/supplemental/Hickson_Sharing_Decoders_Network_WACV_2022_supplemental.pdf

https://youtu.be/qqYODA4C6AU},

doi = {10.1109/WACV51458.2022.00371},

year = {2022},

date = {2022-01-01},

urldate = {2022-01-01},

booktitle = {IEEE/CVF Winter Conference on Applications of Computer Vision},

pages = {3771--3780},

abstract = {We examine the benefits of splitting encoder-decoders for multitask learning and showcase results on three tasks (semantics, surface normals, and depth) while adding very few FLOPS per task. Current hard parameter sharing methods for multi-task pixel-wise labeling use one shared encoder with separate decoders for each task. We generalize this notion and term the splitting of encoder-decoder architectures at different points as fission. Our ablation studies on fission show that sharing most of the decoder layers in multi-task encoder-decoder networks results in improvement while adding far fewer parameters per task. Our proposed method trains faster, uses less memory, results in better accuracy, and uses significantly fewer floating point operations (FLOPS) than conventional multi-task methods, with additional tasks only requiring 0.017% more FLOPS than the single-task network.},

keywords = {computer vision, google, machine learning},

pubstate = {published},

tppubtype = {inproceedings}

}

Niranjan Kumar, Irfan Essa, Sehoon Ha

Cascaded Compositional Residual Learning for Complex Interactive Behaviors Proceedings Article

In: Sim-to-Real Robot Learning: Locomotion and Beyond Workshop at the Conference on Robot Learning (CoRL), arXiv, 2022.

Tags: reinforcement learning, robotics

@inproceedings{2022-Kumar-CCRLCIB,

title = {Cascaded Compositional Residual Learning for Complex Interactive Behaviors},

author = {Niranjan Kumar and Irfan Essa and Sehoon Ha},

url = {https://arxiv.org/abs/2212.08954

https://www.kniranjankumar.com/ccrl/static/pdf/paper.pdf

https://youtu.be/fAklIxiK7Qg

},

doi = {10.48550/ARXIV.2212.08954},

year = {2022},

date = {2022-01-01},

urldate = {2022-01-01},

booktitle = {Sim-to-Real Robot Learning: Locomotion and Beyond Workshop at the Conference on Robot Learning (CoRL)},

publisher = {arXiv},

abstract = {Real-world autonomous missions often require rich interaction with nearby objects, such as doors or switches, along with effective navigation. However, such complex behaviors are difficult to learn because they involve both high-level planning and low-level motor control. We present a novel framework, Cascaded Compositional Residual Learning (CCRL), which learns composite skills by recursively leveraging a library of previously learned control policies. Our framework learns multiplicative policy composition, task-specific residual actions, and synthetic goal information simultaneously while freezing the prerequisite policies. We further explicitly control the style of the motion by regularizing residual actions. We show that our framework learns joint-level control policies for a diverse set of motor skills ranging from basic locomotion to complex interactive navigation, including navigating around obstacles, pushing objects, crawling under a table, pushing a door open with its leg, and holding it open while walking through it. The proposed CCRL framework leads to policies with consistent styles and lower joint torques, which we successfully transfer to a real Unitree A1 robot without any additional fine-tuning.},

keywords = {reinforcement learning, robotics},

pubstate = {published},

tppubtype = {inproceedings}

}
