Paper in IEEE WACV (2015): “Finding Temporally Consistent Occlusion Boundaries using Scene Layout”

Citation

[bibtex key= 2015-Raza-FTCOBUSL]

Abstract

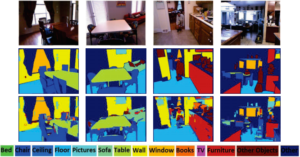

We present an algorithm for finding temporally consistent occlusion boundaries in videos to support the segmentation of dynamic scenes. We learn occlusion boundaries in a pairwise Markov random field (MRF) framework. We first estimate the probability of a spatiotemporal edge being an occlusion boundary by using appearance, flow, and geometric features. Next, we enforce occlusion boundary continuity in an MRF model by learning pairwise occlusion probabilities using a random forest. Then, we temporally smooth boundaries to remove temporal inconsistencies in occlusion boundary estimation. Our proposed framework provides an efficient approach for finding temporally consistent occlusion boundaries in video by utilizing causality, redundancy in videos, and semantic layout of the scene. We have developed a dataset with fully annotated ground-truth occlusion boundaries of over 30 videos (∼5000 frames). This dataset is used to evaluate temporal occlusion boundaries and provides a much-needed baseline for future studies. We perform experiments to demonstrate the role of scene layout and temporal information for occlusion reasoning in video of dynamic scenes.
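The pipeline described in the abstract (per-edge occlusion probabilities, pairwise continuity in an MRF, then temporal smoothing) can be illustrated with a small sketch. This is not the authors' implementation: it assumes the edges along one candidate boundary form a simple chain, takes the per-edge probabilities and the pairwise "same label" probabilities as given inputs rather than learning them with a classifier or random forest, and stands in a causal exponential moving average for the paper's temporal smoothing step. The names `causal_smooth`, `chain_map`, `alpha`, and `pairwise_same` are hypothetical.

```python
import math


def causal_smooth(probs_per_frame, alpha=0.6):
    """Smooth per-edge probabilities over time using only past frames.

    Assumption: an exponential moving average stands in for the paper's
    temporal smoothing; `alpha` weights the current frame.
    """
    smoothed = []
    prev = None
    for probs in probs_per_frame:
        if prev is None:
            cur = list(probs)
        else:
            cur = [alpha * p + (1 - alpha) * q for p, q in zip(probs, prev)]
        smoothed.append(cur)
        prev = cur
    return smoothed


def chain_map(unary, pairwise_same):
    """MAP labels (1 = occlusion boundary) for a chain of edges via Viterbi.

    unary[i]         : probability that edge i is an occlusion boundary
    pairwise_same[i] : probability that edges i and i+1 share a label
    Assumption: a chain-structured pairwise MRF, chosen for illustration.
    """
    def lg(x):
        return math.log(max(x, 1e-9))  # clamp to avoid log(0)

    score = [lg(1 - unary[0]), lg(unary[0])]
    back = []
    for i in range(1, len(unary)):
        node = [lg(1 - unary[i]), lg(unary[i])]
        new_score, ptr = [], []
        for cur in (0, 1):
            cands = [
                score[prev]
                + lg(pairwise_same[i - 1] if prev == cur else 1 - pairwise_same[i - 1])
                for prev in (0, 1)
            ]
            best = 0 if cands[0] >= cands[1] else 1
            new_score.append(cands[best] + node[cur])
            ptr.append(best)
        score = new_score
        back.append(ptr)

    # Backtrack from the best final label to recover the full labeling.
    label = 0 if score[0] >= score[1] else 1
    labels = [label]
    for ptr in reversed(back):
        label = ptr[label]
        labels.append(label)
    labels.reverse()
    return labels


# A weak middle edge is pulled onto the boundary by strong continuity terms.
labels = chain_map([0.9, 0.4, 0.9], [0.9, 0.9])
```

In this toy case the middle edge has probability 0.4 on its own, but the continuity terms favor a consistent labeling along the chain, so it is labeled as part of the boundary. With uninformative pairwise terms (0.5) it would be labeled independently and dropped.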

- Additional Details: Temporal Occlusion Boundaries

- Presented at the IEEE Winter Conference on Applications of Computer Vision (WACV) 2015, Waikoloa Beach, HI, January 6-9, 2015.