Award-winning paper at ICML 2024: “VideoPoet: A large language model for zero-shot video generation.”

Citation

VideoPoet: A large language model for zero-shot video generation (Best Paper, Proceedings Article)

In: Proceedings of the International Conference on Machine Learning (ICML), 2024.

Received the Best Paper Award at ICML 2024. More details are available on the project website.

Abstract

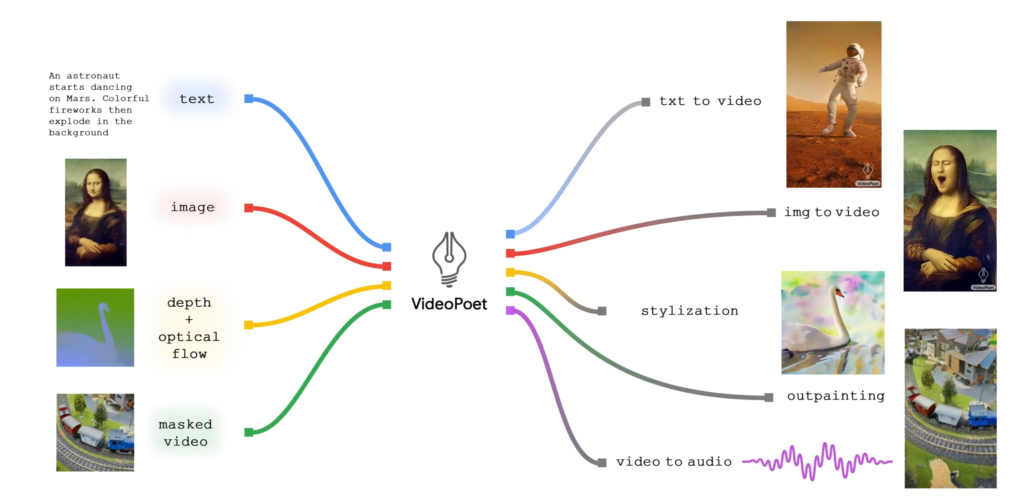

We present VideoPoet, a language model capable of synthesizing high-quality video, with matching audio, from a large variety of conditioning signals. VideoPoet employs a decoder-only transformer architecture that processes multimodal inputs -- including images, videos, text, and audio. The training protocol follows that of Large Language Models (LLMs), consisting of two stages: pretraining and task-specific adaptation. During pretraining, VideoPoet incorporates a mixture of multimodal generative objectives within an autoregressive Transformer framework. The pretrained LLM serves as a foundation that can be adapted for a range of video generation tasks. We present empirical results demonstrating the model's state-of-the-art capabilities in zero-shot video generation, specifically highlighting VideoPoet's ability to generate high-fidelity motions. Project page: http://sites.research.google/videopoet/
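To make the idea of a decoder-only model over multimodal inputs more concrete, here is a minimal sketch of one common way to feed several modalities to a single autoregressive transformer: give each modality a disjoint range of IDs in a shared vocabulary, concatenate the tokens into one sequence, and apply a causal attention mask. The modality names, vocabulary sizes, offset scheme, and the helper names `build_offsets`, `pack_sequence`, and `causal_mask` are all assumptions made for illustration; the abstract does not describe VideoPoet's actual tokenizers or vocabulary layout.

```python
# Illustrative only: the vocabulary sizes and the offset scheme are assumptions
# for this sketch, not details taken from the VideoPoet paper.
from typing import Dict, List, Tuple

# Hypothetical per-modality vocabulary sizes.
VOCAB_SIZES: Dict[str, int] = {"text": 1000, "image": 512, "video": 512, "audio": 256}


def build_offsets(vocab_sizes: Dict[str, int]) -> Dict[str, int]:
    """Assign each modality a disjoint ID range inside one shared vocabulary."""
    offsets: Dict[str, int] = {}
    start = 0
    for name, size in vocab_sizes.items():
        offsets[name] = start
        start += size
    return offsets


def pack_sequence(
    segments: List[Tuple[str, List[int]]], offsets: Dict[str, int]
) -> List[int]:
    """Concatenate per-modality token IDs into one shared-vocabulary sequence."""
    packed: List[int] = []
    for modality, tokens in segments:
        size = VOCAB_SIZES[modality]
        for tok in tokens:
            if not 0 <= tok < size:
                raise ValueError(f"token {tok} out of range for {modality}")
            packed.append(offsets[modality] + tok)
    return packed


def causal_mask(length: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]


offsets = build_offsets(VOCAB_SIZES)
sequence = pack_sequence([("text", [5, 7]), ("image", [0, 3])], offsets)
mask = causal_mask(len(sequence))
```

In this sketch the text tokens keep their IDs and the image tokens are shifted past the text range, so one embedding table and one next-token objective can cover every modality in the sequence.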
